Building an AI Voice Agent to Navigate Insurance Phone Trees

Anyone who’s worked in healthcare administration knows the pain: calling an insurance company to check prior authorization status means 45-minute hold times, navigating byzantine phone menus, and repeating the same information over and over. It’s the kind of tedious, predictable workflow that’s ripe for automation.

I built a proof-of-concept to demonstrate what this could look like: an AI voice agent that autonomously calls insurance IVR systems, navigates the phone tree, provides member information, and extracts authorization status.

What I Built

The POC has four main components:

Mock Insurance IVR - A Twilio-powered phone system that simulates a real insurance company’s automated line. It walks through the typical flow: welcome message, department selection, member ID collection, date of birth verification, CPT code entry, and finally the authorization status.

Voice AI Agent - The brains of the operation. It listens to prompts, decides what DTMF tones to send or what information to speak, and tracks where it is in the conversation flow.

Backend API - Express server with SQLite storing member records, prior authorizations, and call logs. Manages the state machine for each active call.

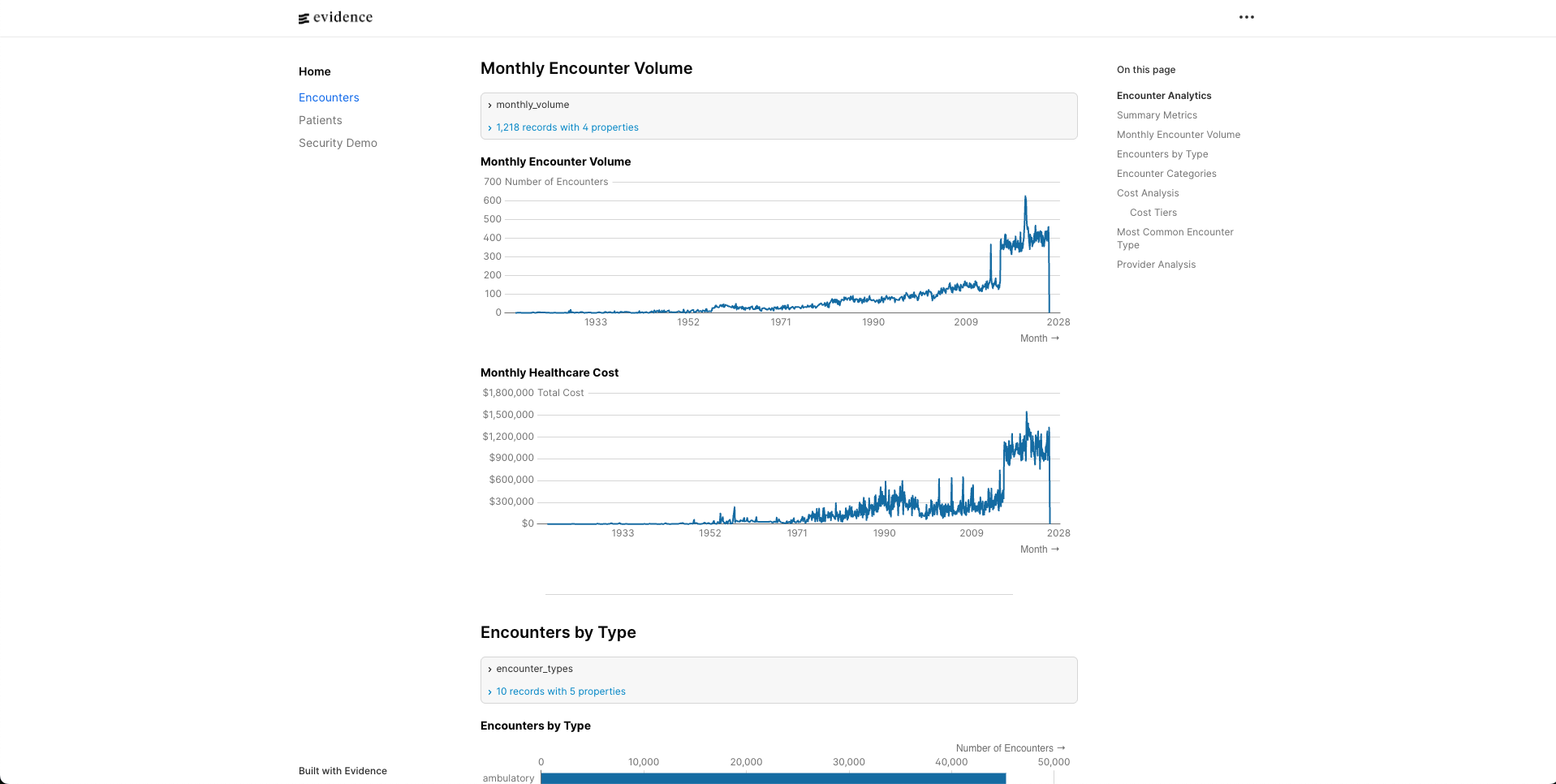

Dashboard - React UI where you select a member, initiate a call, and watch the results come back in real-time.
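For readers who haven’t used TwiML, here is a minimal sketch of how a single mock IVR step could be expressed. The prompt wording and option numbers are illustrative, not taken from the POC. So are the helper names (`mockIvrStep`, `mockIvrResult`) and the `/mock-ivr/step/{n}` route. Each step reads its prompt inside a `<Gather>`, so Twilio collects the caller’s keypresses or speech and POSTs them to the next step’s URL.

```typescript
// Hypothetical prompts for the mock IVR; wording and option numbers are made up.
const PROMPTS: string[] = [
  "Thank you for calling. For claims, press 1. For prior authorization, press 2.",
  "Please say or enter your member ID.",
  "Please enter your date of birth as eight digits, followed by pound.",
  "Please enter the CPT code, followed by pound.",
];

const XML_HEADER = '<?xml version="1.0" encoding="UTF-8"?>';

// Escape text before embedding it in XML.
function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// TwiML for step `i`: the prompt is read inside <Gather>, so Twilio collects
// keypresses or speech and POSTs them to the (hypothetical) next-step URL.
function mockIvrStep(i: number): string {
  if (i < 0 || i >= PROMPTS.length) throw new RangeError(`No such step: ${i}`);
  return (
    `${XML_HEADER}<Response>` +
    `<Gather input="dtmf speech" action="/mock-ivr/step/${i + 1}">` +
    `<Say>${escapeXml(PROMPTS[i])}</Say>` +
    `</Gather></Response>`
  );
}

// Final step: speak the status that was looked up for the collected member and CPT code.
function mockIvrResult(cptCode: string, status: string, through: string): string {
  const text = `Your prior authorization for CPT code ${cptCode} is ${status} through ${through}.`;
  return `${XML_HEADER}<Response><Say>${escapeXml(text)}</Say><Hangup/></Response>`;
}
```

In the actual mock, the final step would first look up the member’s authorization in SQLite and then produce a response along the lines of `mockIvrResult`.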

The demo flow: select a member from the dashboard, click “Check Authorization”, and watch the agent dial the mock IVR, navigate through the menus, and provide the member’s information. The dashboard then displays the extracted authorization status.
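The agent side of that flow can be sketched as a small state machine. Each IVR stage is one step, and each step maps a transcribed prompt to one of three moves: a keypress, a spoken reply, or “keep listening”. This is an illustrative sketch, not the POC’s code. The `Step` names and the keyword checks are assumptions. So is the choice to speak the member ID but key in the date of birth and CPT code.

```typescript
// Steps of a hypothetical hardcoded flow mirroring the mock IVR, in order.
type Step = "department" | "memberId" | "dob" | "cpt" | "status" | "done";

interface Member {
  memberId: string; // e.g. a 10-digit ID
  dob: string;      // MMDDYYYY, keyed in on the phone keypad
  cptCode: string;
}

// What the agent sends back for one IVR prompt.
type Action =
  | { kind: "dtmf"; digits: string }      // press keys
  | { kind: "say"; text: string }         // speak via TTS
  | { kind: "wait" }                      // prompt not relevant yet; keep listening
  | { kind: "done"; statusText: string }; // final status prompt captured

interface Transition {
  action: Action;
  next: Step;
}

// Stay on the current step and keep listening.
const stay = (step: Step): Transition => ({ action: { kind: "wait" }, next: step });

// Decide the next action from the current step and the transcribed prompt.
function decide(step: Step, prompt: string, member: Member): Transition {
  const p = prompt.toLowerCase();
  switch (step) {
    case "department": {
      // e.g. "For claims, press 1. For prior authorization, press 2."
      const m = p.match(/prior authorization,? press (\d)/);
      return m ? { action: { kind: "dtmf", digits: m[1] }, next: "memberId" } : stay(step);
    }
    case "memberId":
      // Speak the ID digit by digit: "1 2 3 ..."
      return p.includes("member id")
        ? { action: { kind: "say", text: member.memberId.split("").join(" ") }, next: "dob" }
        : stay(step);
    case "dob":
      return p.includes("date of birth")
        ? { action: { kind: "dtmf", digits: member.dob + "#" }, next: "cpt" }
        : stay(step);
    case "cpt":
      return p.includes("cpt")
        ? { action: { kind: "dtmf", digits: member.cptCode + "#" }, next: "status" }
        : stay(step);
    case "status":
      // Hand the raw status sentence to the extraction step.
      return p.includes("authorization")
        ? { action: { kind: "done", statusText: prompt }, next: "done" }
        : stay(step);
    case "done":
      return stay(step);
  }
}
```

This is rigid by design. If a payer words its menu as “Press 2 for prior authorization”, the department step never matches and the agent just keeps listening.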

Architecture Decision: Webhooks vs Streaming

The first real decision was architecture. There are two main approaches to voice AI:

Real-time streaming (Pipecat, Deepgram, ElevenLabs): Your agent connects directly to audio streams, processing speech in real time with minimal latency. This is what you’d want for natural conversations.

Webhook-driven (Twilio’s built-in STT/TTS): Twilio handles the telephony, transcribes speech, and calls your webhook with the text; you respond with TwiML instructions. Higher latency (~1-2 seconds) but far fewer moving parts.

For a POC demonstrating IVR navigation, I chose webhooks. The latency is fine when you’re interacting with a phone tree that’s waiting for input anyway. And having Twilio handle STT, TTS, and telephony in one integration meant I could focus on the actual logic rather than stitching together three different services.

The tradeoff is real though. Production systems handling natural back-and-forth conversation would need the streaming approach.
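Here is roughly what one turn of the webhook loop could return. The sketch assumes the agent places an outbound call through Twilio and uses `<Gather input="speech">` to have Twilio transcribe each IVR prompt. The `Action` shape, the `toTwiml` helper, and the `/ivr/step` URL are hypothetical. `<Play digits>`, `<Say>`, `<Gather>`, and `<Hangup>` are standard TwiML verbs.

```typescript
// Actions the agent can take (hypothetical shape, not taken from the POC).
type Action =
  | { kind: "dtmf"; digits: string }
  | { kind: "say"; text: string }
  | { kind: "wait" }
  | { kind: "done"; statusText: string };

const XML_HEADER = '<?xml version="1.0" encoding="UTF-8"?>';

// Escape text before embedding it in XML.
function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build the TwiML for one webhook turn: perform the action, then <Gather> speech so
// Twilio transcribes the IVR's next prompt and POSTs it (as SpeechResult) to actionUrl.
function toTwiml(action: Action, actionUrl: string): string {
  if (action.kind === "done") {
    return `${XML_HEADER}<Response><Hangup/></Response>`;
  }
  let verb = "";
  if (action.kind === "dtmf") {
    // <Play digits> sends DTMF tones into the call.
    verb = `<Play digits="${escapeXml(action.digits)}"/>`;
  } else if (action.kind === "say") {
    verb = `<Say>${escapeXml(action.text)}</Say>`;
  }
  // "wait" emits no verb: just listen for the next prompt.
  return (
    `${XML_HEADER}<Response>${verb}` +
    `<Gather input="speech" action="${escapeXml(actionUrl)}" speechTimeout="auto"/>` +
    `</Response>`
  );
}
```

Twilio POSTs the transcript to the `action` URL as a form-encoded `SpeechResult` field. An Express handler behind `express.urlencoded()` would therefore read `req.body.SpeechResult`, decide the next action, and reply with `res.type("text/xml").send(toTwiml(action, "/ivr/step"))`.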

The Hard Part: Data Extraction

Here’s what I underestimated: extracting structured data from IVR responses is genuinely difficult.

My mock IVR returns something like: “Your prior authorization for CPT code 99213 is approved through June 30th, 2026.”

Easy enough to regex, right? But real insurance companies don’t speak from the same script:

- “Authorization valid until 6/30/2026”

- “Approved. Expires on the thirtieth of June”

- “This authorization is active through end of Q2”

- “Approved with end date June 30”

Every payer phrases it differently. I spent more time than I’d like to admit tweaking regex patterns for my controlled mock environment. In production, pattern matching per payer becomes a maintenance nightmare.
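To make that concrete, here is a minimal sketch of the kind of pattern that works against the mock. The `AuthResult` shape, the status words, and the pattern itself are illustrative assumptions rather than the POC’s actual regex. The pattern parses the mock IVR’s sentence, but the other phrasings fall straight through it.

```typescript
// Hypothetical result shape; `expires` is kept exactly as spoken.
interface AuthResult {
  cptCode: string;
  status: string;
  expires: string | null;
}

// Tuned to one phrasing, e.g.
// "Your prior authorization for CPT code 99213 is approved through June 30th, 2026."
const MOCK_PATTERN =
  /CPT code (\d{5}) is (approved|denied|pending)(?: through ([A-Za-z]+ \d{1,2}(?:st|nd|rd|th)?, \d{4}))?/i;

function extractWithRegex(transcript: string): AuthResult | null {
  const m = MOCK_PATTERN.exec(transcript);
  if (!m) return null; // any other wording ends up here
  return { cptCode: m[1], status: m[2].toLowerCase(), expires: m[3] ?? null };
}

// The same facts, phrased the way other payers might say them.
const variants = [
  "Approved. Expires on the thirtieth of June",
  "This authorization is active through end of Q2",
  "Approved with end date June 30",
];
for (const v of variants) console.log(v, "=>", extractWithRegex(v)); // logs null for each
```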

The solution is obvious in retrospect: use an LLM for semantic extraction. Send the transcript to Claude with a schema and let it handle the variation. Instead of maintaining regex patterns for 50 different payers, you maintain one prompt that says “extract the authorization status, effective date, and expiration date from this IVR response.”
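Here is a minimal sketch of that approach. Several pieces are hypothetical: the `AuthExtraction` schema, its field names and status values, and the `Complete` function type. The actual model call (for example, through Anthropic’s SDK) is injected rather than shown, so the logic can be exercised with a stub. Whatever provider you use, keep the validation step: parse the model’s reply and check it against the schema before anything downstream trusts it.

```typescript
// Hypothetical schema for what we ask the model to return.
interface AuthExtraction {
  status: "approved" | "denied" | "pending" | "unknown";
  effectiveDate: string | null;  // YYYY-MM-DD, or null if not stated
  expirationDate: string | null; // YYYY-MM-DD, or null if not stated
}

// Hypothetical: any function that sends a prompt to an LLM and resolves to its text reply.
type Complete = (prompt: string) => Promise<string>;

const STATUSES: ReadonlySet<string> = new Set(["approved", "denied", "pending", "unknown"]);
const ISO_DATE = /^\d{4}-\d{2}-\d{2}$/; // checks the YYYY-MM-DD shape only

function buildPrompt(transcript: string, today: string): string {
  return [
    "Extract the authorization status, effective date, and expiration date from this IVR response.",
    `Today is ${today}. Resolve partial or relative dates (like "end of Q2") against it.`,
    'Reply with JSON only: {"status": "approved" | "denied" | "pending" | "unknown", ' +
      '"effectiveDate": "YYYY-MM-DD" | null, "expirationDate": "YYYY-MM-DD" | null}',
    "",
    `IVR response: """${transcript}"""`,
  ].join("\n");
}

// Parse and validate the model's reply; throws on malformed JSON or schema violations.
function parseExtraction(raw: string): AuthExtraction {
  const data = JSON.parse(raw);
  const isDateOrNull = (v: unknown): boolean =>
    v === null || (typeof v === "string" && ISO_DATE.test(v));
  if (!STATUSES.has(data?.status) || !isDateOrNull(data?.effectiveDate) || !isDateOrNull(data?.expirationDate)) {
    throw new Error(`Extraction failed validation: ${raw}`);
  }
  return { status: data.status, effectiveDate: data.effectiveDate, expirationDate: data.expirationDate };
}

async function extractAuthorization(transcript: string, today: string, complete: Complete): Promise<AuthExtraction> {
  return parseExtraction(await complete(buildPrompt(transcript, today)));
}
```

Passing today’s date lets the model resolve phrasings like “end of Q2” or a date with no year. Verify against real transcripts that it does this reliably rather than assuming it.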

What Production Looks Like

The POC proves the concept. Production would swap out several components:

| Component | POC | Production |
|---|---|---|
| Speech-to-Text | Twilio built-in | Deepgram (medical vocabulary) |
| Text-to-Speech | Twilio Polly | ElevenLabs (natural voices) |
| Data Extraction | Regex | Claude API |
| Pipeline | Webhook | Pipecat streaming |
| Navigation | Hardcoded flow | Config-driven per payer |

The last row is the interesting one. Each insurance company has different menu structures, different prompts, different response formats. You’d need configurable “navigation profiles” - essentially a state machine definition per payer that tells the agent: “When you hear ‘for prior authorization press 2’, press 2. When asked for member ID, speak the 10-digit ID.”

This is where the real product complexity lives. The voice AI part is increasingly commoditized. The value is in the payer-specific configurations and the ability to handle the long tail of edge cases gracefully.
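A navigation profile could be as simple as an ordered list of pattern-to-response rules stored as data. The format below (`NavigationProfile`, `Rule`, `{field}` placeholders) and the example payer are hypothetical, sketched only to show the shape of the idea. The same `respond` function serves every payer, so onboarding a new one means writing a new profile rather than new code.

```typescript
// Hypothetical profile format: plain data, so it could live in JSON or a database.
interface Rule {
  when: string;  // regex source, matched case-insensitively against the transcribed prompt
  dtmf?: string; // keys to press; may contain {field} placeholders
  say?: string;  // text to speak; may contain {field} placeholders
}

interface NavigationProfile {
  payer: string;
  rules: Rule[]; // checked in order; first match wins
}

type Fields = Record<string, string>;
type Reply = { kind: "dtmf" | "say"; value: string } | null;

// Substitute {field} placeholders with member data; fail loudly on unknown fields.
function fill(template: string, fields: Fields): string {
  return template.replace(/\{(\w+)\}/g, (_match: string, name: string) => {
    if (!Object.prototype.hasOwnProperty.call(fields, name)) {
      throw new Error(`Profile references unknown field: ${name}`);
    }
    return fields[name];
  });
}

// One generic responder for every payer; null means no rule matched.
function respond(profile: NavigationProfile, prompt: string, fields: Fields): Reply {
  for (const rule of profile.rules) {
    if (!new RegExp(rule.when, "i").test(prompt)) continue;
    if (rule.dtmf !== undefined) return { kind: "dtmf", value: fill(rule.dtmf, fields) };
    if (rule.say !== undefined) return { kind: "say", value: fill(rule.say, fields) };
  }
  return null;
}

// Example profile for a made-up payer.
const examplePayer: NavigationProfile = {
  payer: "Example Health Plan",
  rules: [
    { when: "for prior authorization,? press 2", dtmf: "2" },
    { when: "member id", say: "{memberId}" },
    { when: "date of birth", dtmf: "{dob}#" },
  ],
};
```

Because the first matching rule wins, rule order matters. A prompt that mentions both the member ID and the date of birth would hit the member ID rule first. Overlaps like that, and deciding what to do when nothing matches, are part of the long tail.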

Closing Thoughts

The technology to automate insurance phone calls exists today. Deepgram handles medical terminology well. ElevenLabs produces voices that don’t sound robotic. LLMs can extract structured data from messy transcripts.

The ROI is obvious: a single patient access rep might spend 2-3 hours daily on hold with insurance companies. Multiply that across a health system and you’re talking about meaningful labor costs on tasks that add zero value.

The question isn’t whether this will happen, but who builds it and how fast the payers adapt. Some will probably start offering APIs once they realize the alternative is thousands of AI agents clogging their phone lines.

If you’re working on similar problems in healthcare automation, I’d love to chat. Find me on Twitter at @karancito.